FLUX 1.1 Pro: A Comprehensive Guide

FLUX 1.1 Pro, the latest advancement in generative AI technology developed by Black Forest Labs, is now available through the Replicate Node in AI-FLOW. In this guide, we'll explore how FLUX 1.1 Pro can revolutionize your projects, how to run it, and how it compares to other popular models like its predecessor, FLUX Pro, and Stable Diffusion 3.

Why Choose FLUX 1.1 Pro?

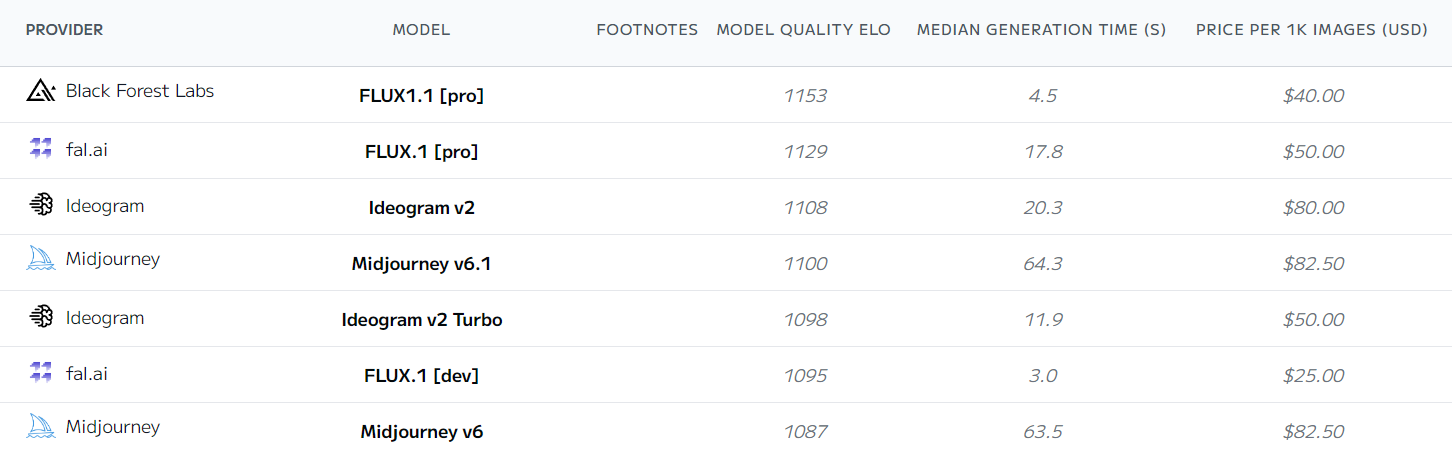

FLUX 1.1 Pro is three times faster than FLUX Pro and offers significant improvements in image quality, prompt adherence, and diversity. It sets a new standard in AI-driven image creation, making it an excellent choice for both seasoned developers and beginners across a range of applications. Of the models compared in this guide, it produced the best text-to-image results.

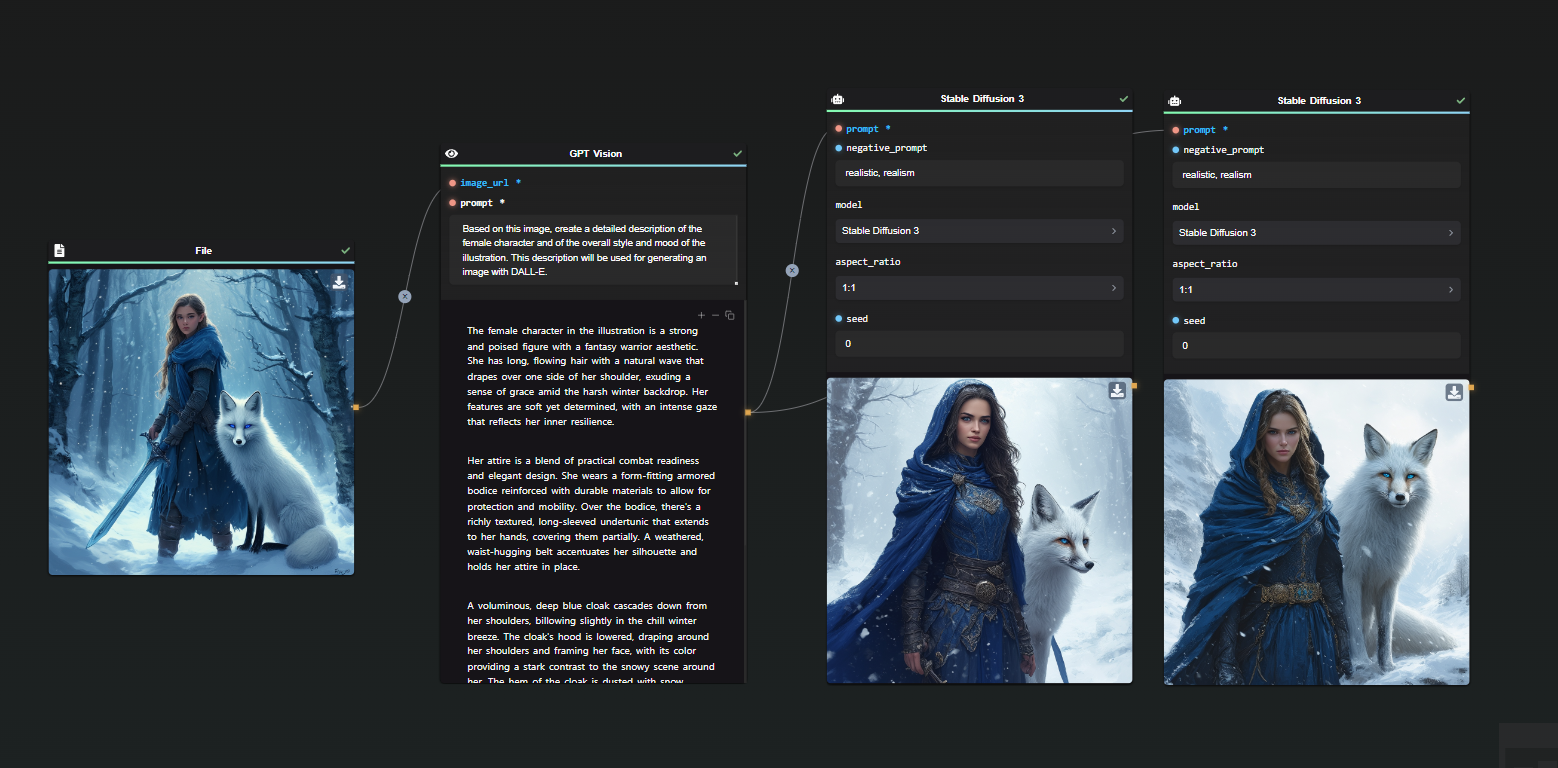

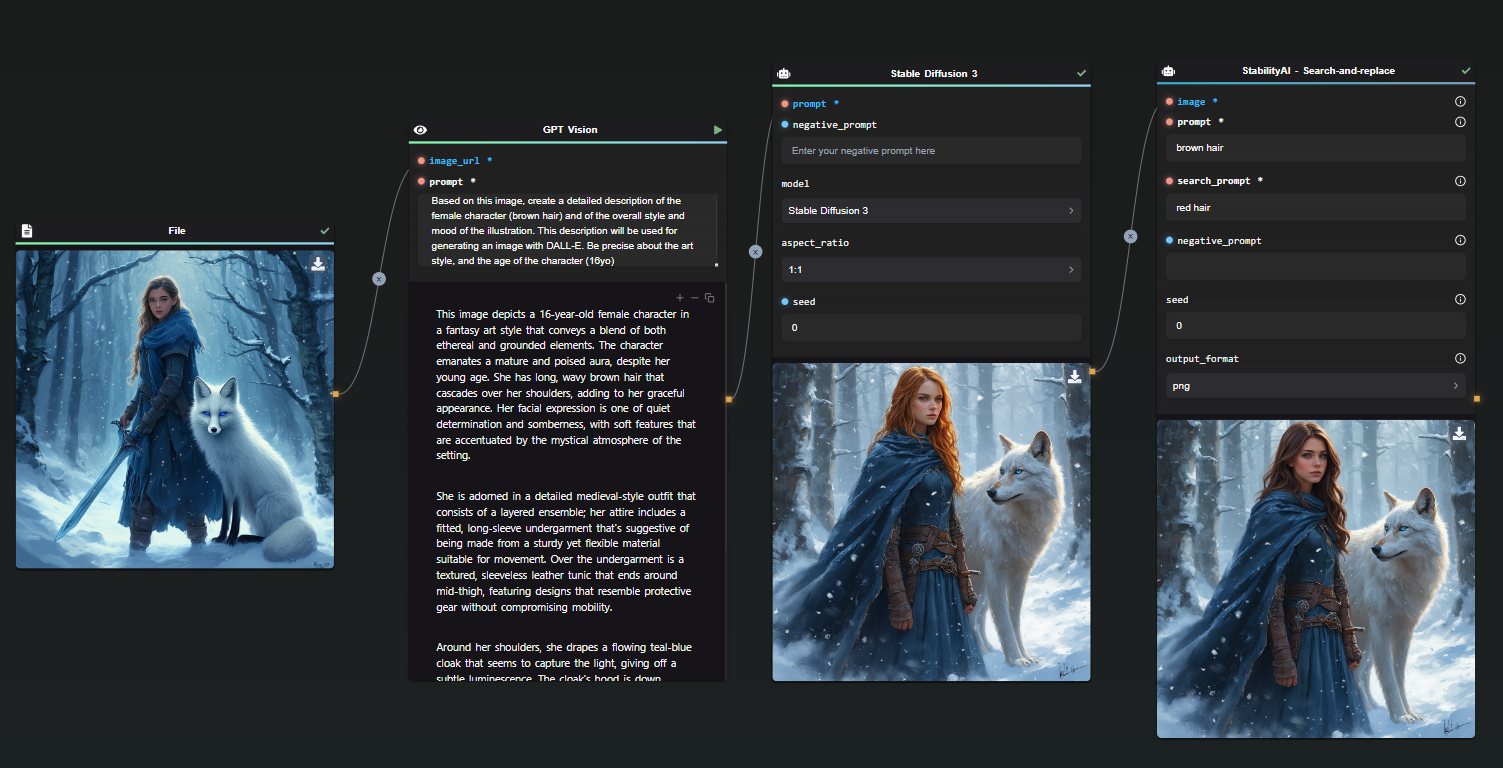

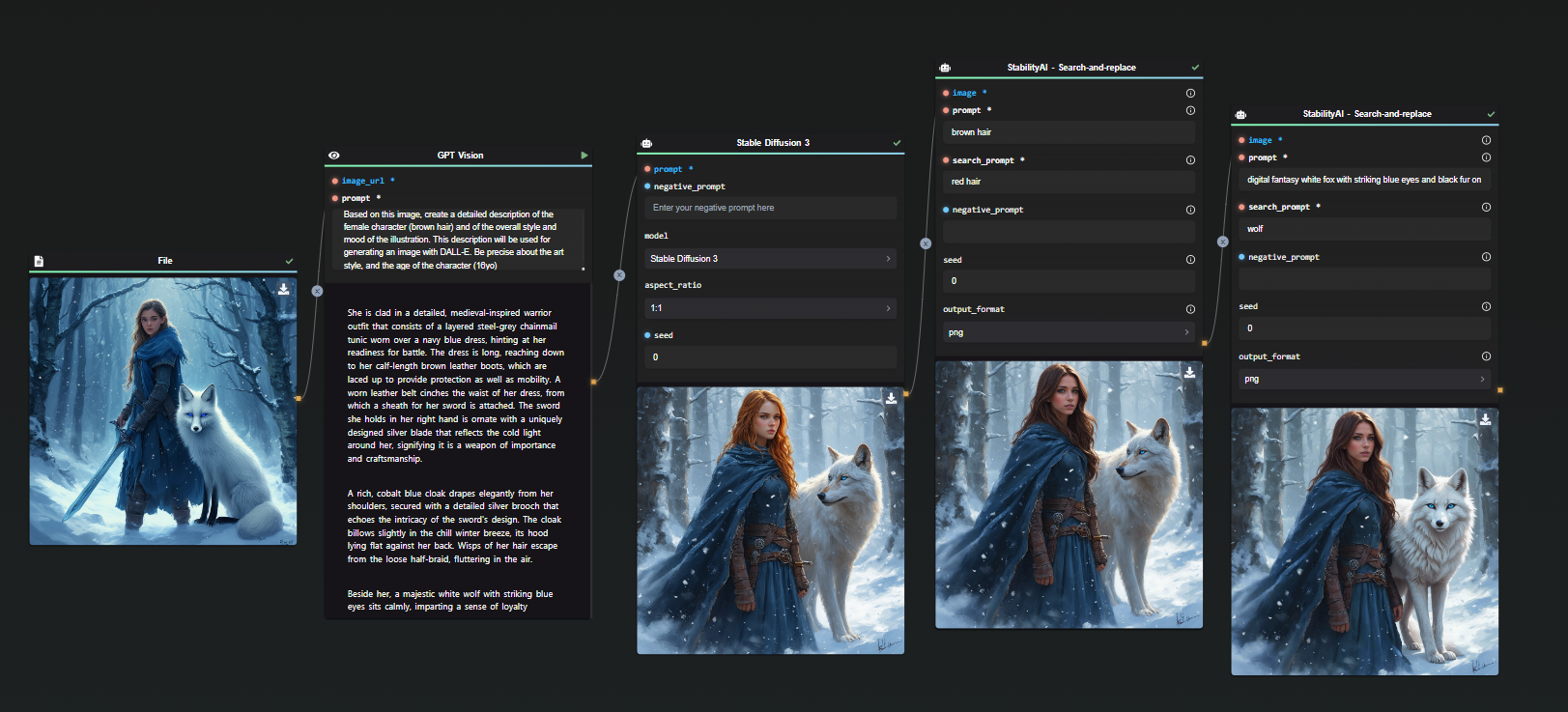

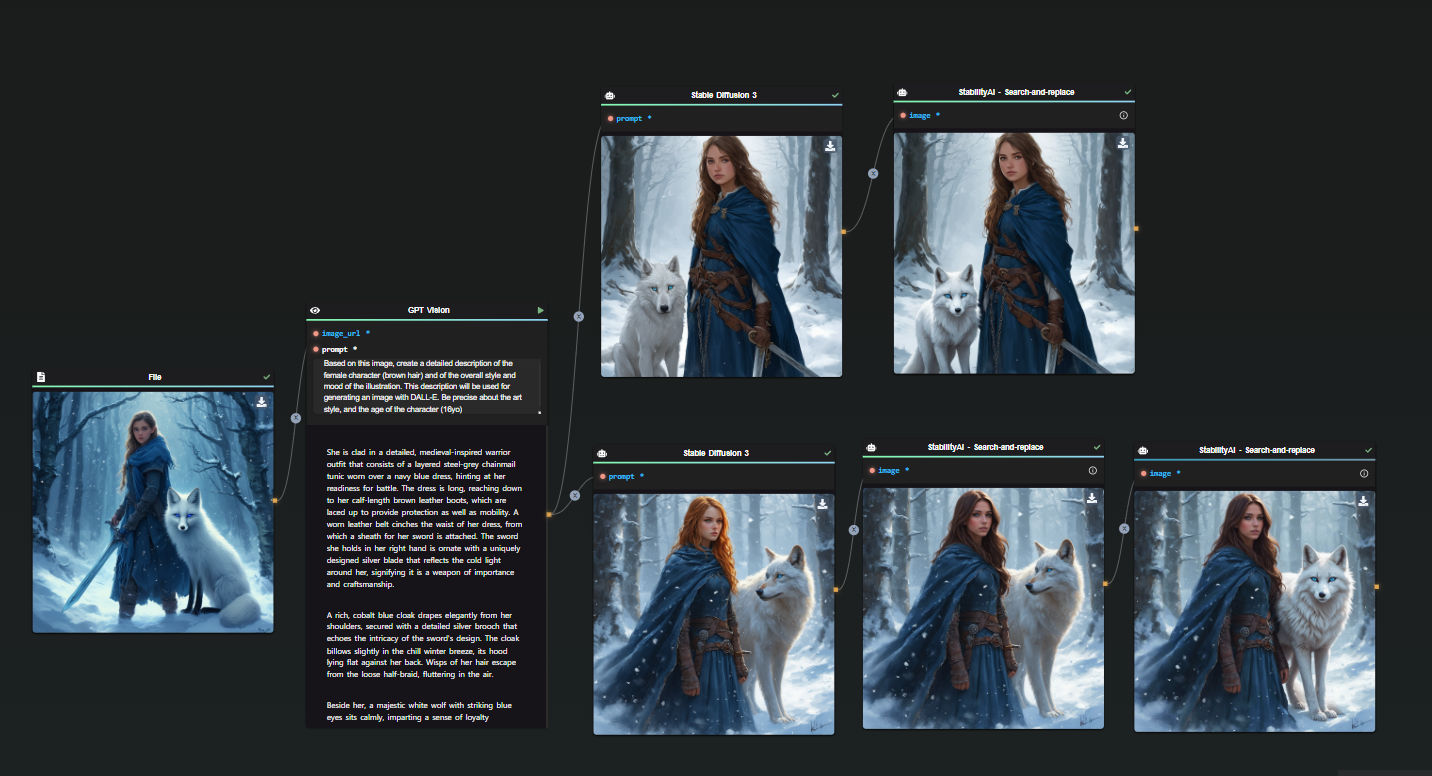

Comparing FLUX 1.1 Pro to FLUX Pro and Stable Diffusion

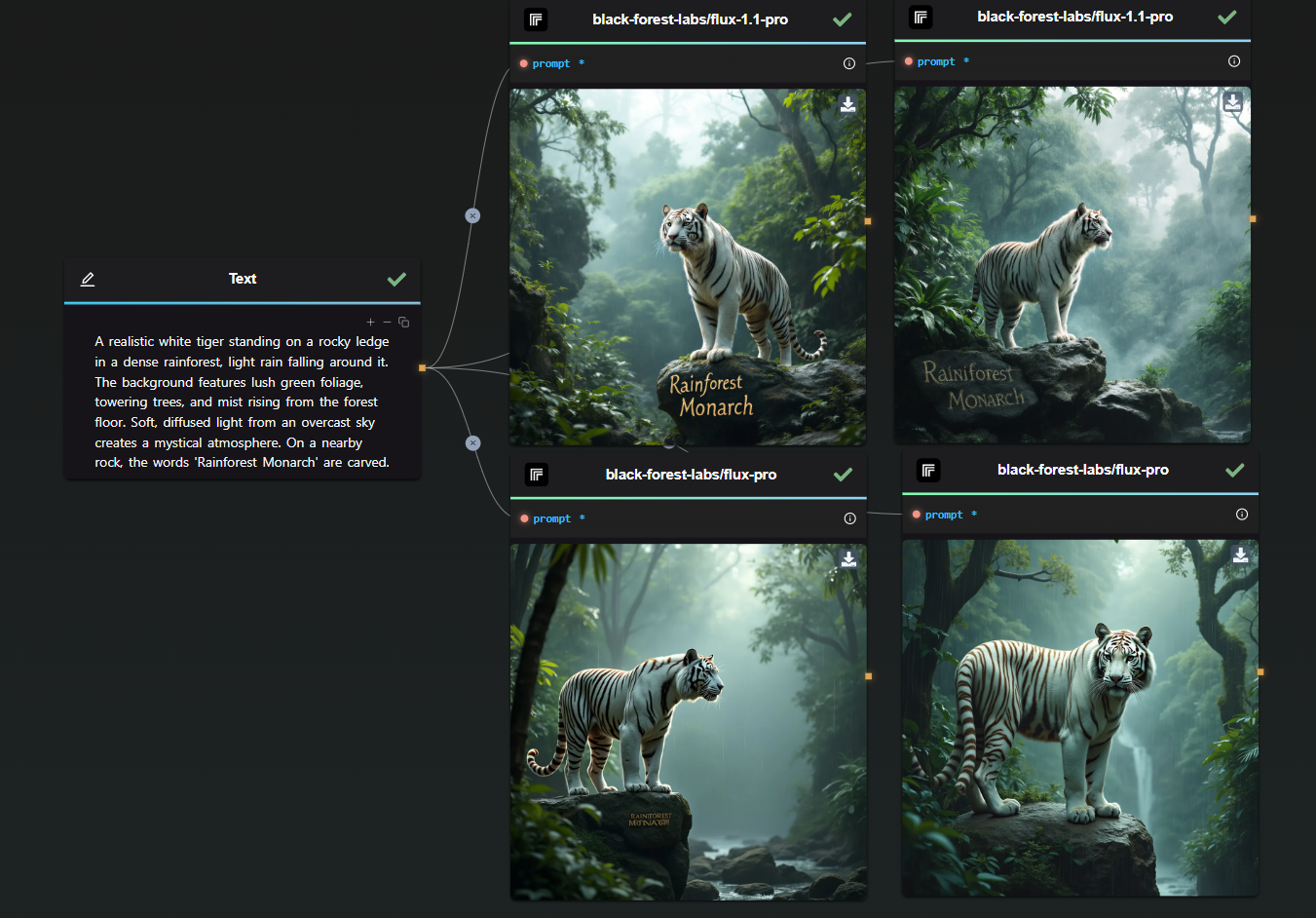

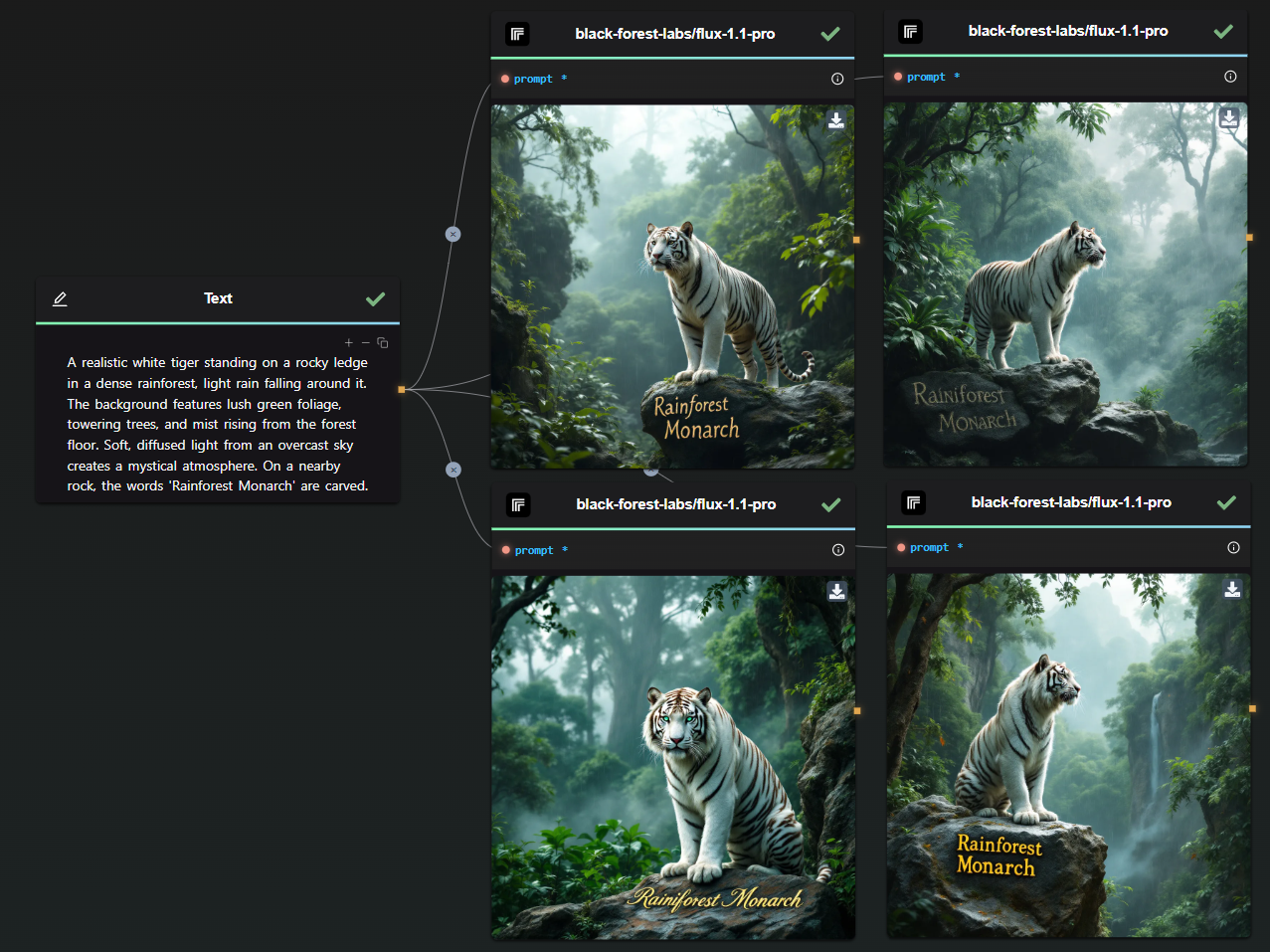

Choosing an AI model requires understanding how it measures up to other available options. Let’s use a sample prompt to illustrate the capabilities of these models:

A realistic white tiger standing on a rocky ledge in a dense rainforest, light rain falling around it. The background features lush green foliage, towering trees, and mist rising from the forest floor. Soft, diffused light from an overcast sky creates a mystical atmosphere. On a nearby rock, the words 'Rainforest Monarch' are carved.

This prompt provides enough elements to thoroughly evaluate each model's precision and creativity.
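One way to make "precision" concrete is to split the prompt into its individual elements and tick off which ones each image actually contains. The sketch below is only an illustration: the element list is my own reading of the prompt, the `adherence` helper is hypothetical, and the observation in the usage example is a placeholder rather than a recorded result.

```python
from __future__ import annotations

# Elements of the sample prompt, as read by a human reviewer (an assumption).
PROMPT_ELEMENTS = [
    "white tiger",
    "rocky ledge",
    "dense rainforest",
    "light rain",
    "lush foliage and towering trees",
    "mist rising from the forest floor",
    "soft, diffused overcast light",
    "'Rainforest Monarch' carved on a rock",
]


def adherence(observed: set[str]) -> tuple[float, list[str]]:
    """Return the share of prompt elements present and the list of missing ones."""
    unknown = observed - set(PROMPT_ELEMENTS)
    if unknown:
        raise ValueError(f"not part of the checklist: {sorted(unknown)}")
    missing = [element for element in PROMPT_ELEMENTS if element not in observed]
    score = (len(PROMPT_ELEMENTS) - len(missing)) / len(PROMPT_ELEMENTS)
    return score, missing


if __name__ == "__main__":
    # Hypothetical review of one image in which the carved text is absent.
    seen = set(PROMPT_ELEMENTS[:-1])
    score, missing = adherence(seen)
    print(score, missing)
```

A checklist like this does not measure realism or composition, but it keeps the "did the model follow the prompt" part of the comparison consistent across models.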

FLUX 1.1 Pro vs. FLUX Pro

In the comparison below, FLUX 1.1 Pro is at the top, while FLUX Pro is at the bottom.

The difference is clear: FLUX 1.1 Pro generates a more realistic-looking tiger with a richly detailed background, resulting in a more immersive scene. FLUX Pro, on the other hand, failed to render the carved text 'Rainforest Monarch' in one of its generations.

Note: Each model was given a single attempt—no retakes, no cherry-picking.

- Speed: FLUX 1.1 Pro is three times faster than FLUX Pro, making it the ideal choice for time-sensitive projects.

- Image Quality: Improved prompt adherence and diversity mean FLUX 1.1 Pro produces superior images compared to FLUX Pro.

- Cost: Priced at just 4 cents per image, FLUX 1.1 Pro offers a cost-effective solution for high-quality image generation.

- Prompt Upsampling: FLUX 1.1 Pro includes an optional prompt upsampling feature for enhanced image generation. It was not enabled for this test.

- Custom Ratios: FLUX 1.1 Pro allows more flexibility in aspect ratio customization than its predecessor.
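To put the speed and cost figures into numbers, here is a small back-of-the-envelope sketch. It uses only the two values stated above (4 cents per image, three times faster than FLUX Pro); the function names are my own, and the time estimate assumes the 3x figure holds for your particular job, which may not be the case.

```python
from decimal import Decimal

PRICE_PER_IMAGE_USD = Decimal("0.04")  # from the text: 4 cents per image
SPEEDUP_VS_FLUX_PRO = 3                # from the text: three times faster than FLUX Pro


def batch_cost_usd(num_images: int) -> Decimal:
    """Total price of generating num_images images with FLUX 1.1 Pro."""
    if num_images < 0:
        raise ValueError("num_images must be non-negative")
    return PRICE_PER_IMAGE_USD * num_images


def estimated_seconds(flux_pro_seconds: float) -> float:
    """Rough FLUX 1.1 Pro time, given a measured FLUX Pro time for the same job."""
    return flux_pro_seconds / SPEEDUP_VS_FLUX_PRO


if __name__ == "__main__":
    print(batch_cost_usd(250))      # 10.00
    print(estimated_seconds(60.0))  # 20.0
```

`Decimal` is used instead of floats so that prices add up exactly to the cent.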

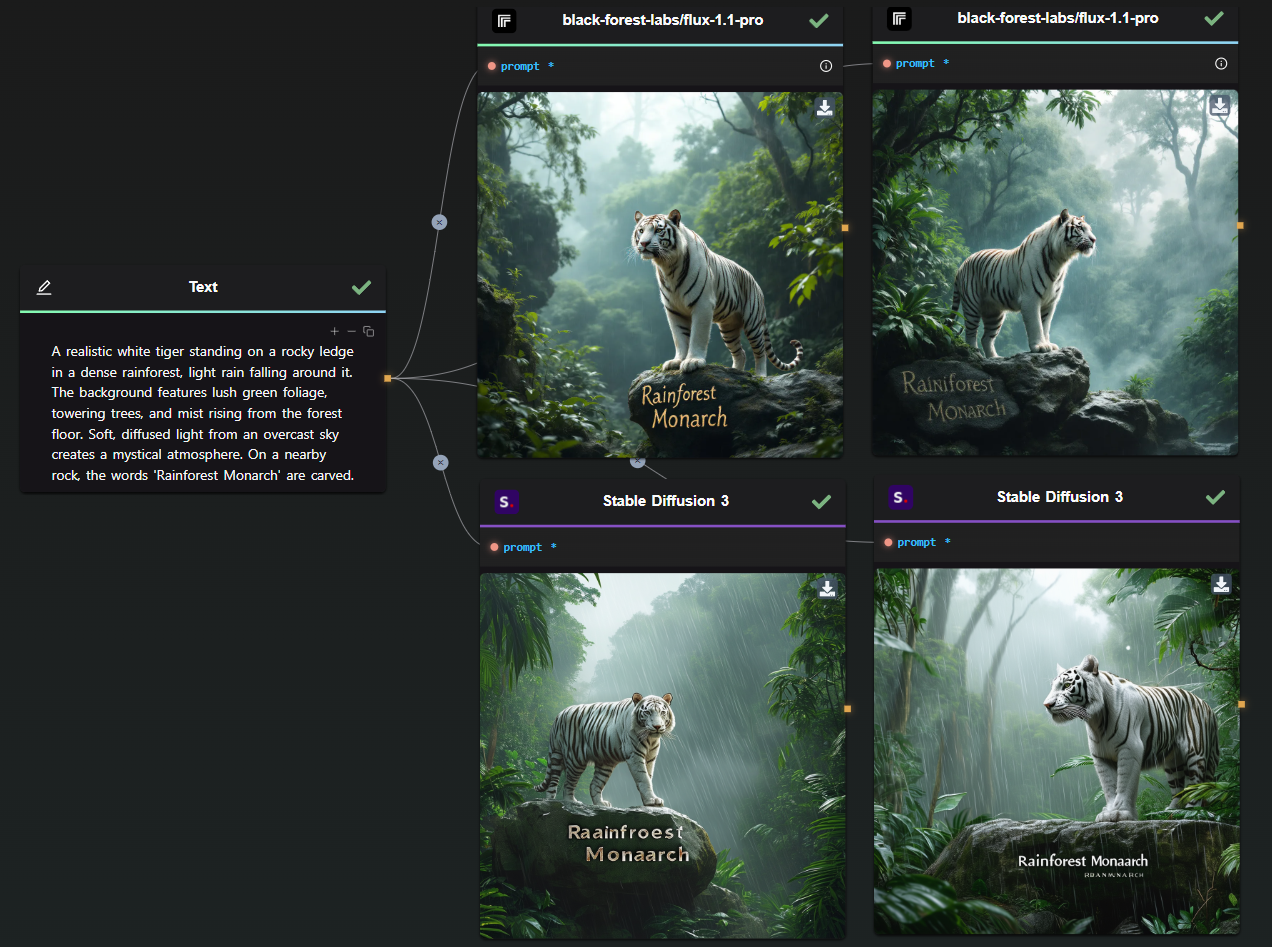

FLUX 1.1 Pro vs. Stable Diffusion 3 Large

Again, this was a one-shot generation for each model. In this test, FLUX 1.1 Pro clearly outperformed Stable Diffusion 3 Large.

- Performance: FLUX 1.1 Pro is faster and generates higher-quality images, especially in high-resolution settings.

- Customization: FLUX 1.1 Pro offers more customization options, giving greater control over the output than Stable Diffusion 3 Large.

- Limitations: FLUX 1.1 Pro currently lacks an image-to-image feature.

- Overall Quality: FLUX 1.1 Pro consistently delivers more precise and visually appealing results.

FLUX 1.1 Pro with Prompt Upsampling

For curiosity’s sake, here’s a comparison with prompt upsampling enabled:

By analyzing the outcome, we can infer what has been added during the upsampling process:

First Image: The focus here is on the tiger's deep, unrealistic teal eyes, which give it a mythical quality. The rock has a new brown texture that makes it look less perfect and more integrated into the environment. I also suspect that the upsampling added the large tree in the background.

Second Image: In this version, the tiger's position appears more defined. I believe the upsampling introduced the waterfall in the background, as well as the silhouette of a mountain. Additionally, the area around the tiger's head is less cluttered, making it the focal point in the now more open space. The rock also features additional texture.

In conclusion, prompt upsampling is a fascinating tool that can add significant detail, realism, and improved composition compared with the plain prompt a less experienced user might write. The downside is that you cannot predict the direction in which upsampling will take the image.
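If you want to repeat this comparison outside AI-FLOW, one option is to send the same request twice and change only the upsampling flag. The sketch below uses the Replicate Python client (`replicate.run`). The model identifier and the input field names (`prompt_upsampling`, `aspect_ratio`, `seed`) are assumptions based on the model's Replicate page, so check them against the current schema before relying on them. The helper names are my own.

```python
MODEL = "black-forest-labs/flux-1.1-pro"  # assumed Replicate model identifier

# Shortened here for readability; use the full sample prompt for a real test.
PROMPT = (
    "A realistic white tiger standing on a rocky ledge in a dense rainforest, "
    "light rain falling around it."
)


def build_inputs(prompt, upsample, aspect_ratio="3:2", seed=None):
    """Build one request payload; field names are assumed, not confirmed."""
    inputs = {
        "prompt": prompt,
        "prompt_upsampling": upsample,
        "aspect_ratio": aspect_ratio,
    }
    if seed is not None:
        inputs["seed"] = seed
    return inputs


def ab_pair(prompt, seed=42):
    """Two payloads that differ only in the prompt upsampling flag."""
    return (
        build_inputs(prompt, False, seed=seed),
        build_inputs(prompt, True, seed=seed),
    )


def generate(inputs):
    """Send one payload to Replicate (needs REPLICATE_API_TOKEN and network)."""
    import replicate  # third-party client, imported lazily: pip install replicate

    return replicate.run(MODEL, input=inputs)


if __name__ == "__main__":
    plain, upsampled = ab_pair(PROMPT)
    print(plain)
    print(upsampled)
    # Uncomment to actually generate (each call is billed per image):
    # print(generate(plain))
    # print(generate(upsampled))
```

Fixing the seed removes one source of variation, but the two images will still differ because upsampling rewrites the prompt itself. That difference is exactly what this comparison is meant to expose.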

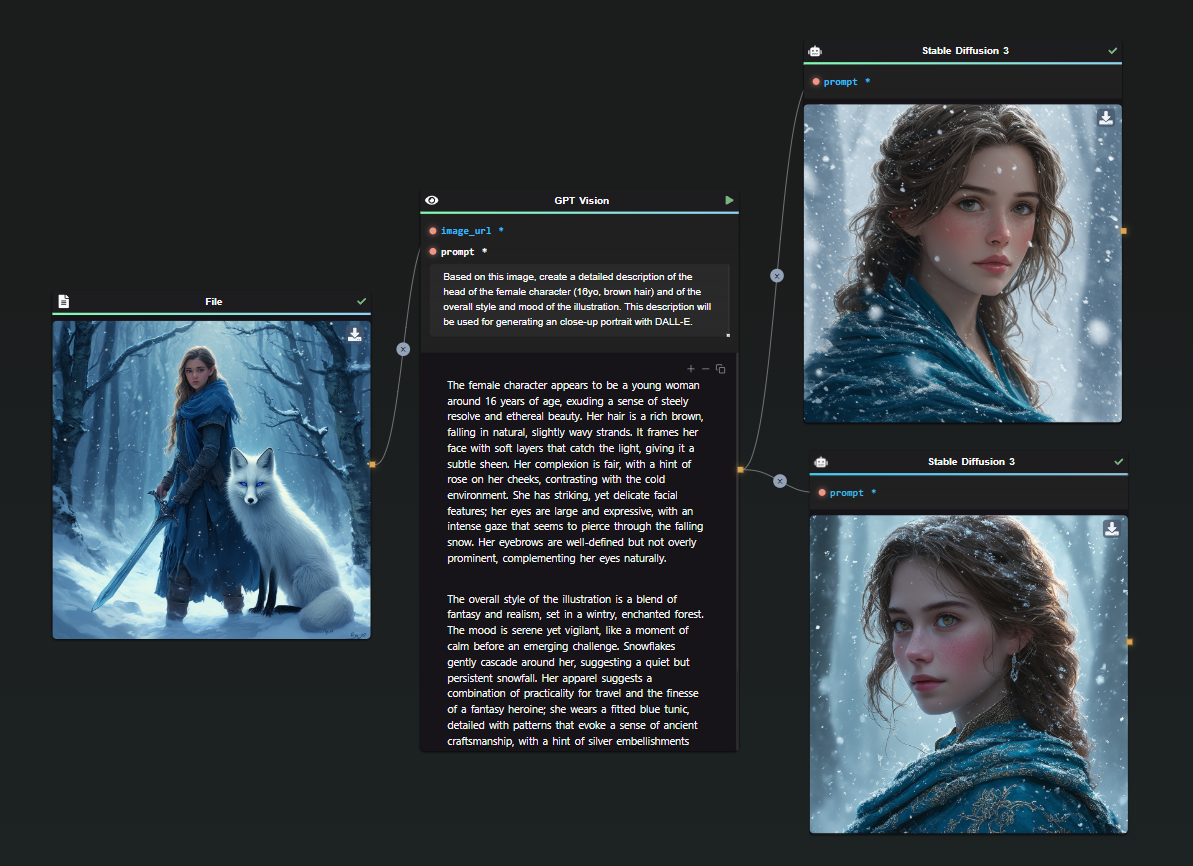

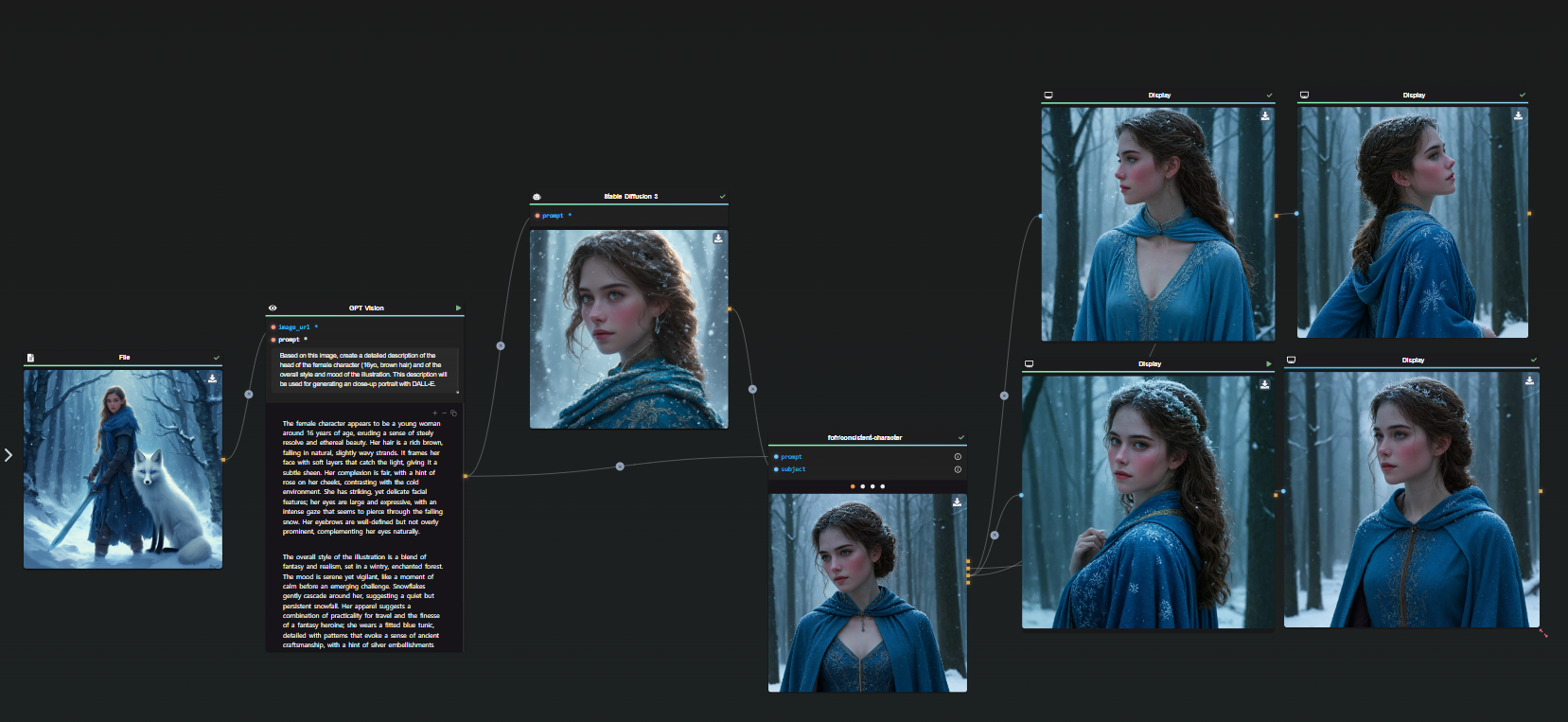

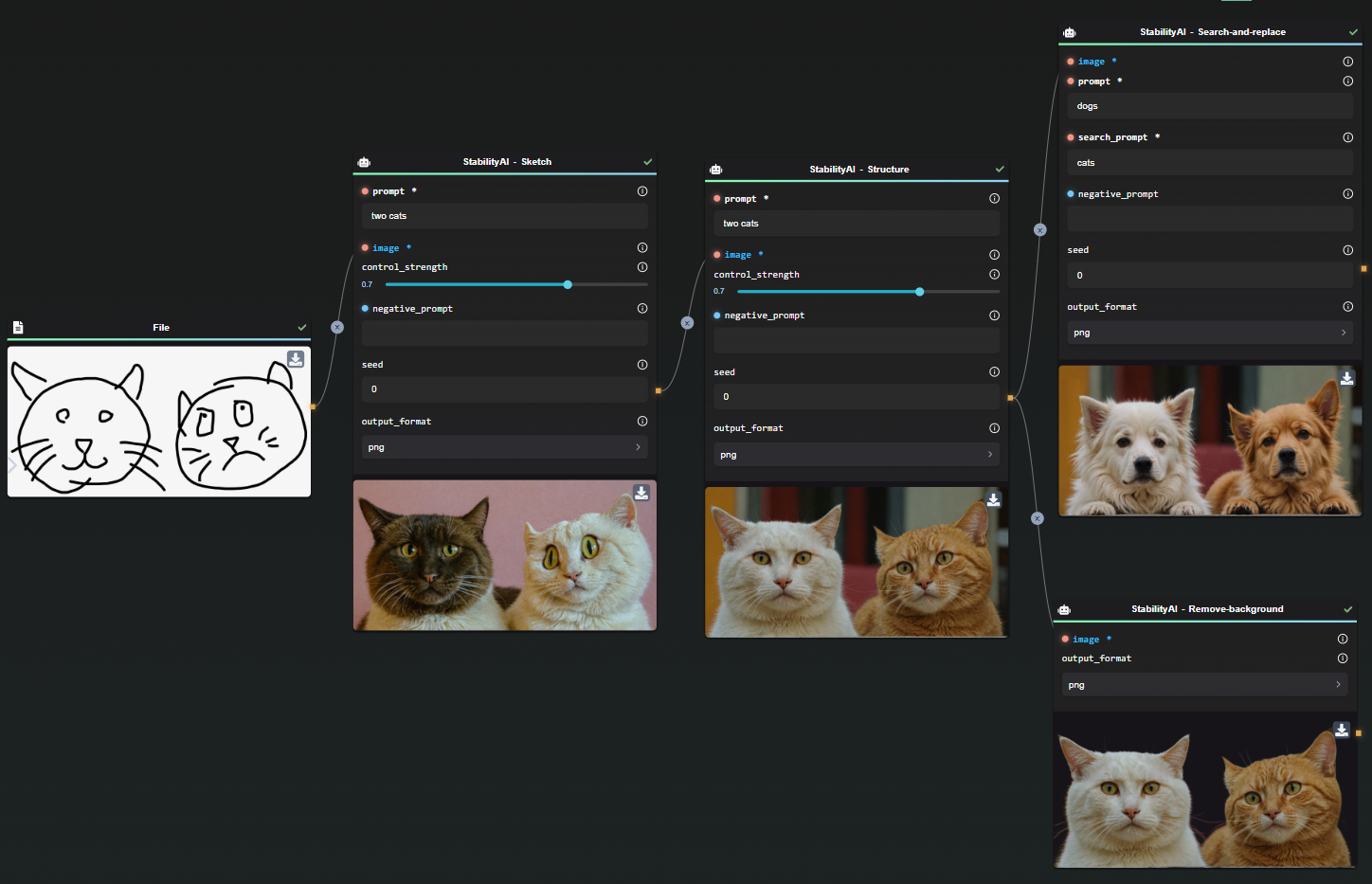

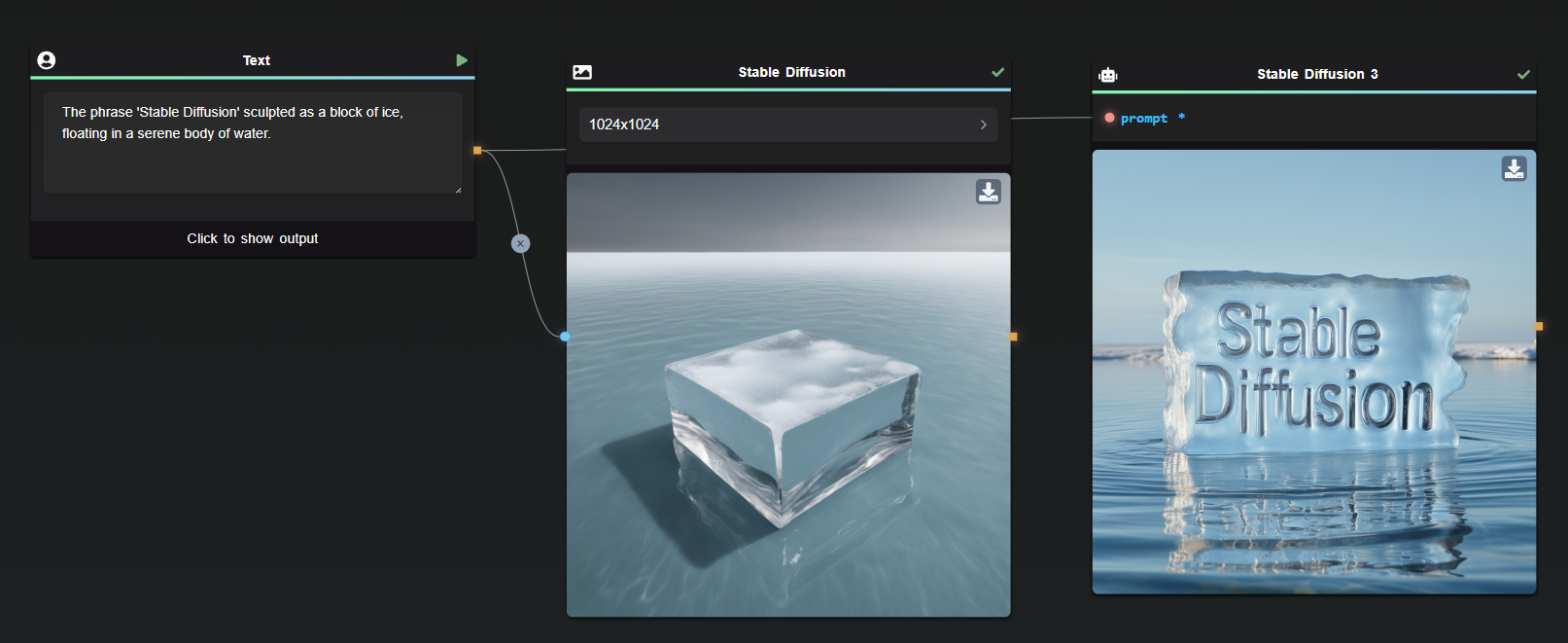

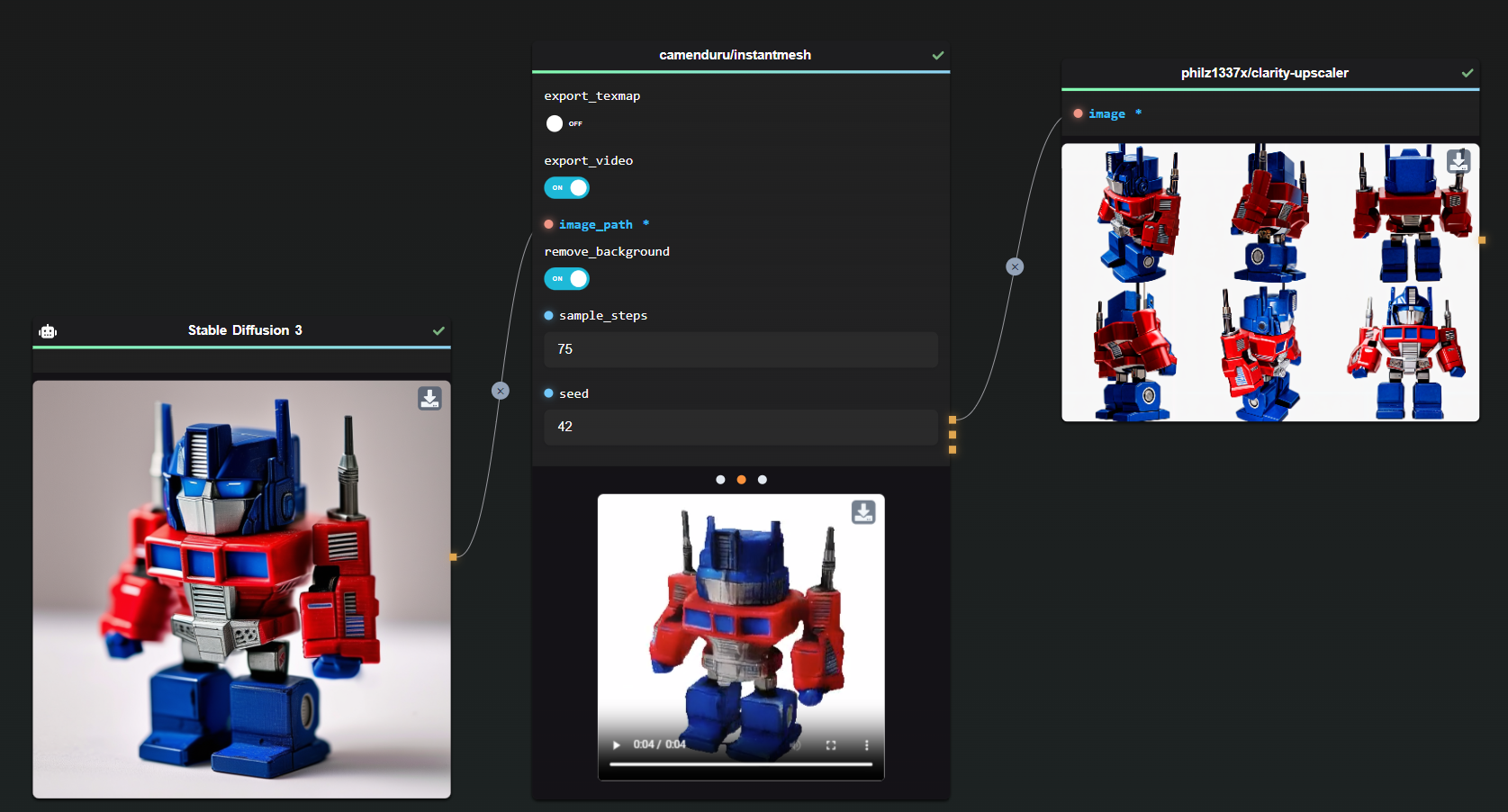

Start Using FLUX 1.1 Pro in Your Workflows with AI-FLOW

AI-FLOW is a powerful platform where you can connect multiple AI models seamlessly, automate processes, and build custom AI tools without extensive coding knowledge. Whether you’re automating content creation, experimenting with various AI models, or managing data, AI-FLOW has the tools you need to streamline your projects.

You can easily experiment with FLUX 1.1 Pro by using the Replicate Node in AI-FLOW. Simply drag the node into your workflow and start generating stunning images in seconds.

Ready to Transform Your Projects with FLUX 1.1 Pro?

Get started for free and explore the potential of FLUX 1.1 Pro by visiting the AI-Flow App. Unleash your creativity and take your projects to the next level with the power of AI-driven image generation!

Additional Resources

For more detailed information, refer to the following resources: