Unleashing the Power of AI Workflow with API Builder Nodes

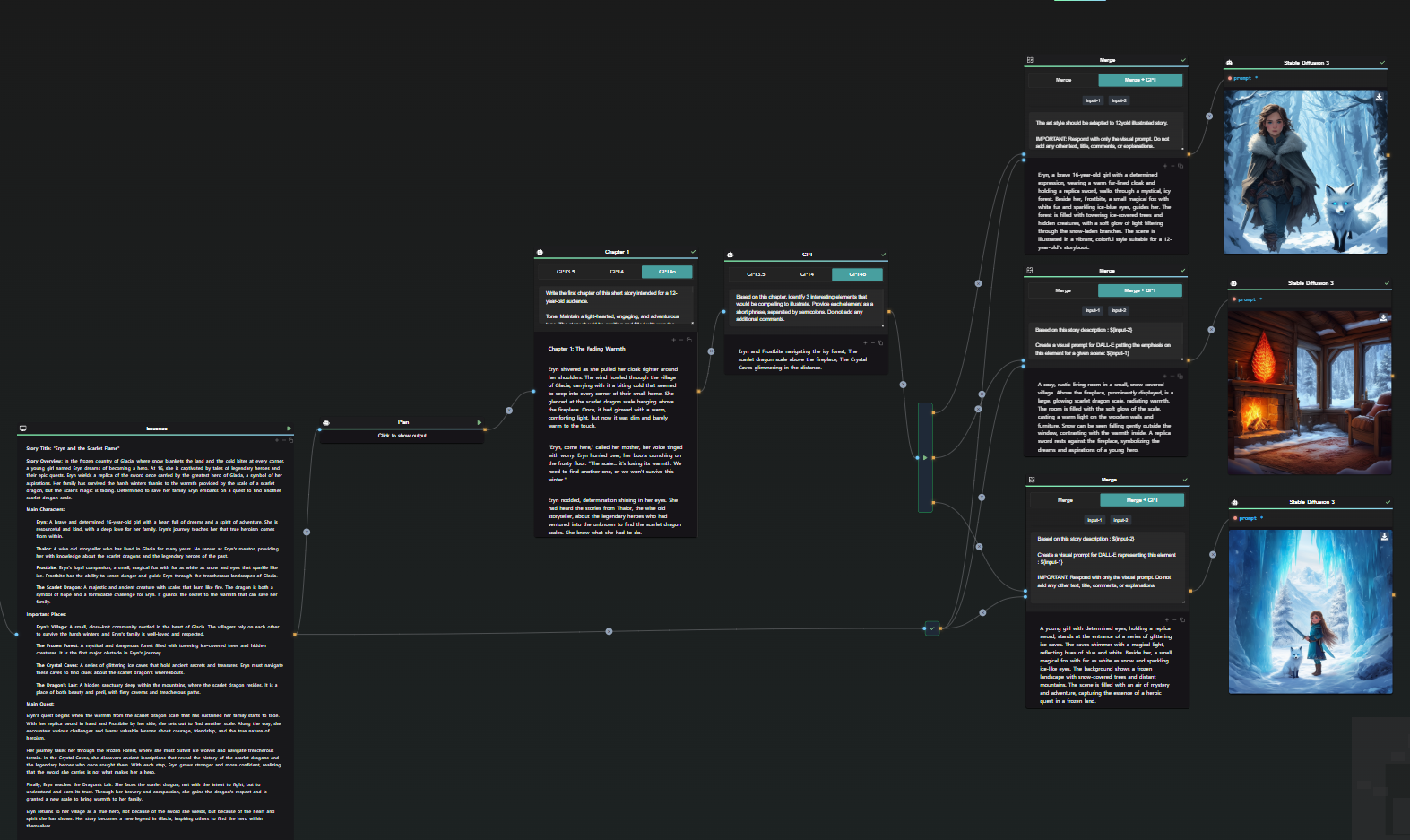

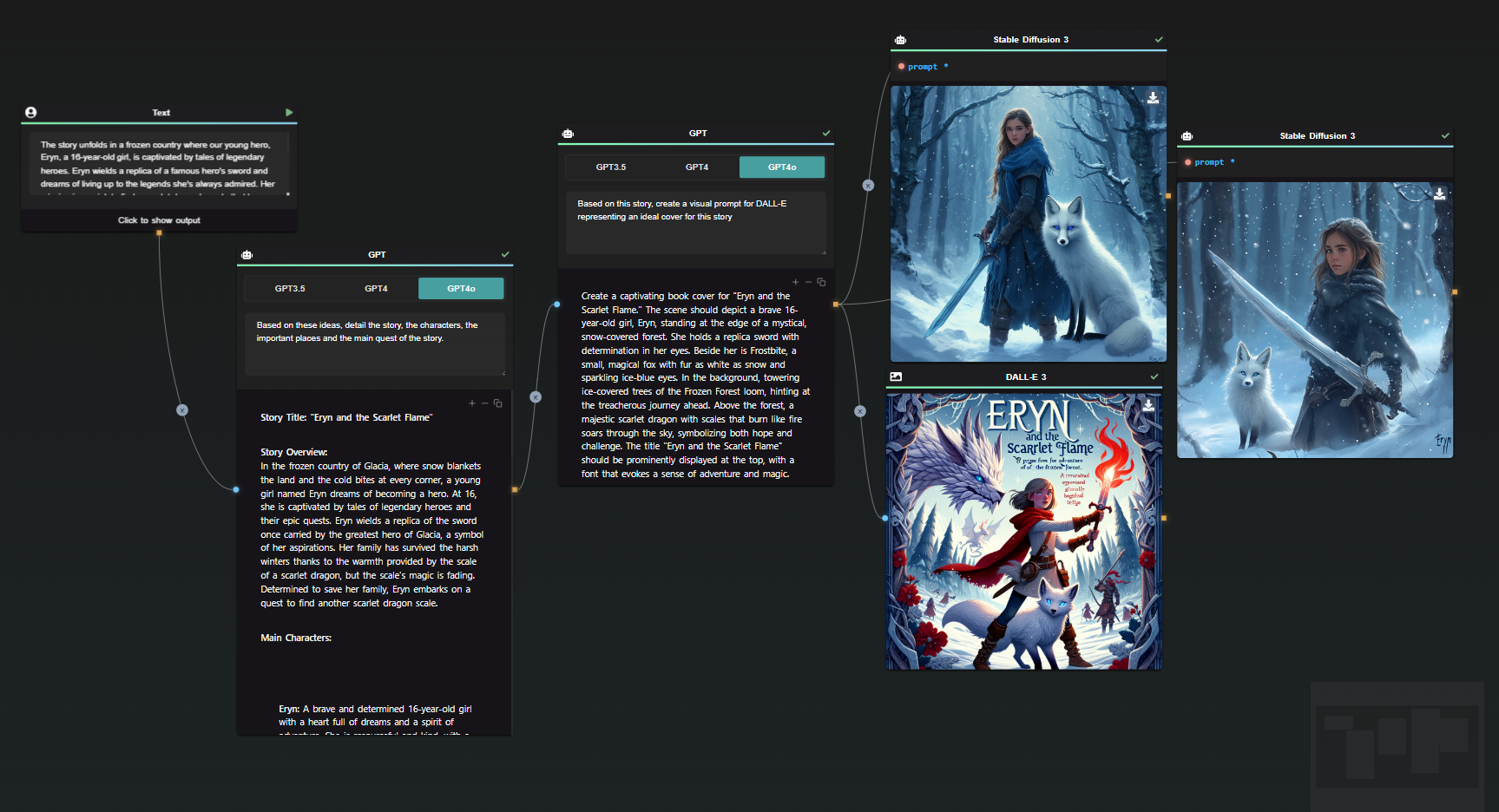

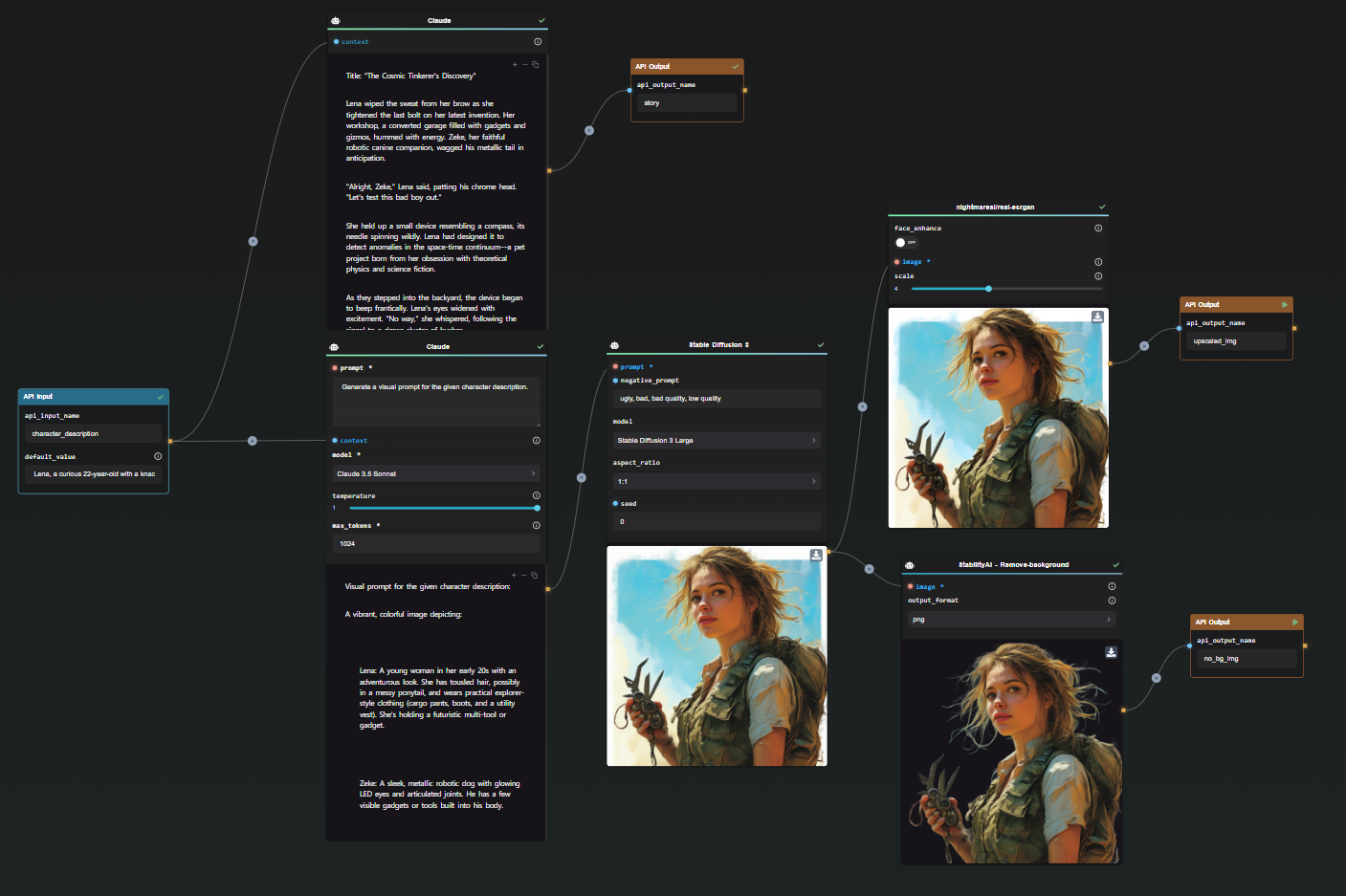

The AI-Flow API makes it easier to streamline AI workflows and integrate them into your own applications. With the API Builder, developers can build robust flows that combine models such as GPT, DALL-E, Claude, Stable Diffusion, or any Replicate model, and then call those flows from other software. This article walks through the core features of the AI-Flow API Builder and shows how to use them.

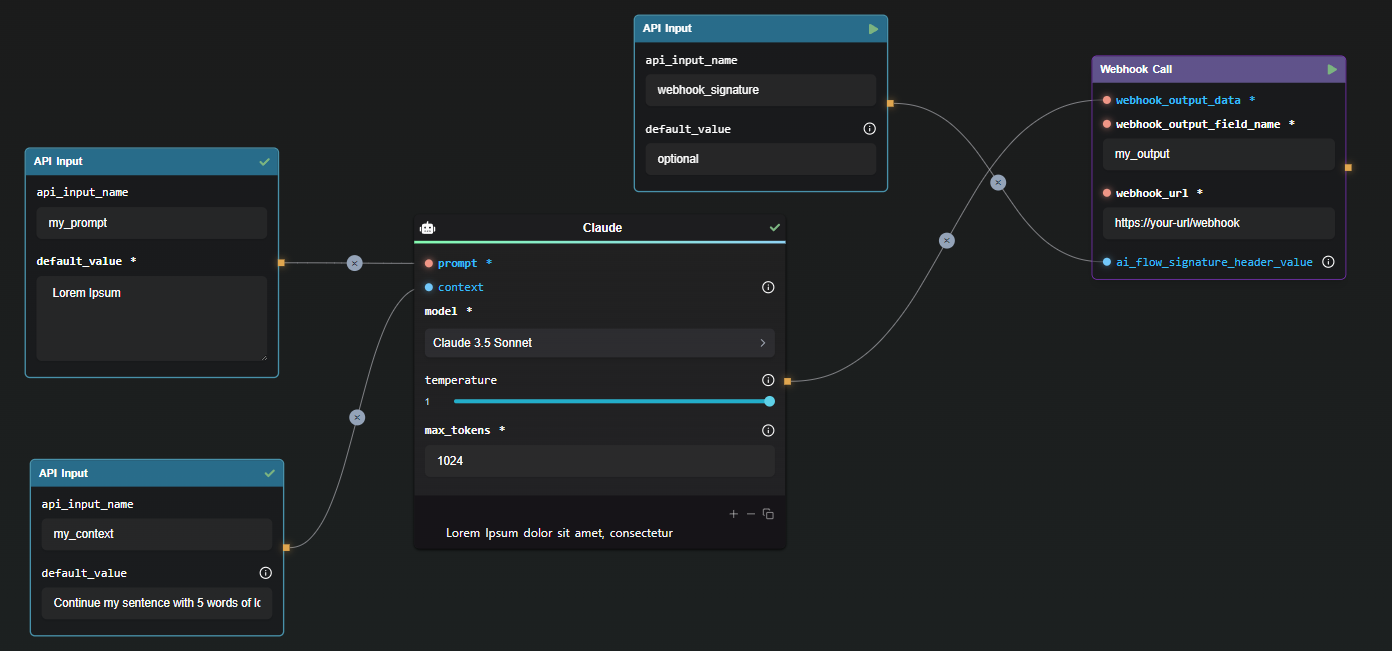

Streamline Your AI Flow with API Input and Output Nodes

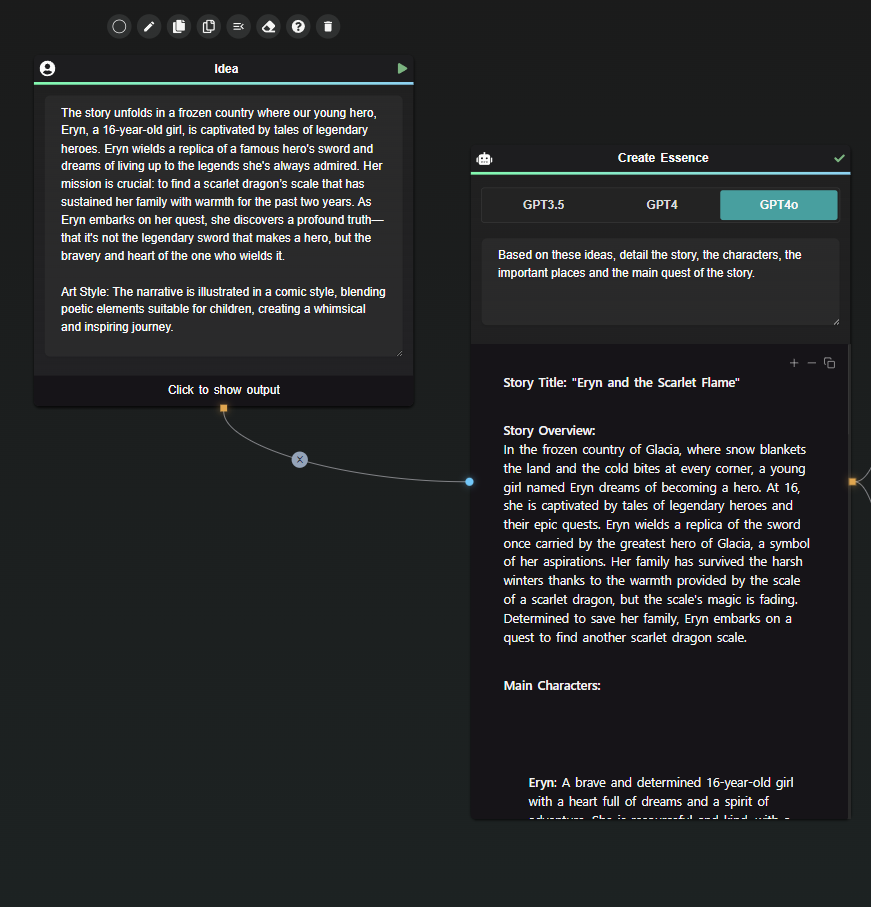

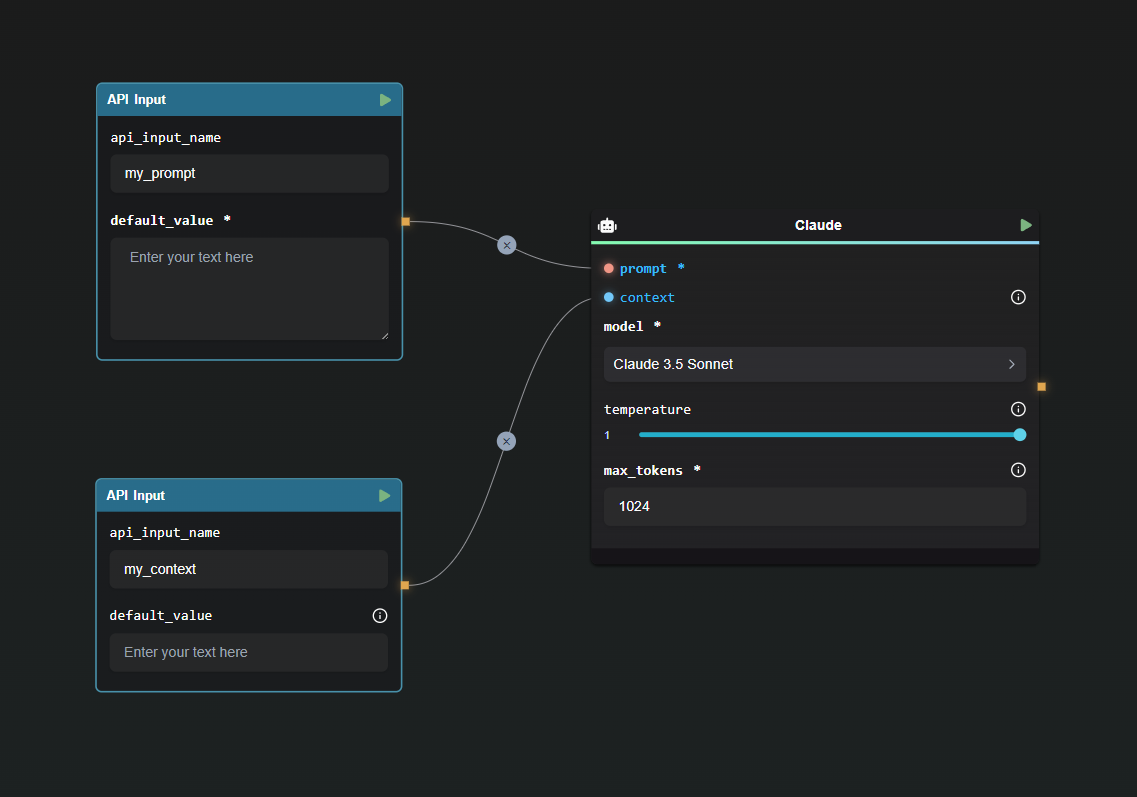

API Input Node: The API Input Node defines the inputs of your API, mapping each field in the request body to a corresponding node in your flow. Setting a default value on a field makes that parameter optional.

Example Configuration:

```json
{
  "my_prompt": "Lorem Ipsum",
  "my_context": "Lorem Ipsum"
}
```

Each key in this body corresponds to one input of the flow, so starting a run only requires sending clearly named parameters.
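To make the effect of default values concrete, here is a minimal Python sketch of how a request body could be resolved against a set of API Input Nodes. The `InputField` class, the `resolve_inputs` function, and the error raised for a missing required field are hypothetical illustrations, not AI-Flow's actual implementation; the sketch simply assumes that a field with a default is optional and a field without one is required.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class InputField:
    """Hypothetical stand-in for one API Input Node."""
    name: str                      # key expected in the request body
    default: Optional[Any] = None  # None means "no default": the field is required


def resolve_inputs(fields: list[InputField], body: dict[str, Any]) -> dict[str, Any]:
    """Return the value each input field will pass into the flow.

    Values from the request body take precedence; missing fields fall back
    to their default, and missing fields without a default are reported.
    """
    resolved: dict[str, Any] = {}
    missing: list[str] = []
    for field in fields:
        if field.name in body:
            resolved[field.name] = body[field.name]
        elif field.default is not None:
            resolved[field.name] = field.default
        else:
            missing.append(field.name)
    if missing:
        raise ValueError(f"missing required input(s): {', '.join(missing)}")
    return resolved


if __name__ == "__main__":
    fields = [InputField("my_prompt"), InputField("my_context", default="Lorem Ipsum")]
    # my_context is omitted from the body, so its default is used
    print(resolve_inputs(fields, {"my_prompt": "Hello"}))
    # prints {'my_prompt': 'Hello', 'my_context': 'Lorem Ipsum'}
```

Using None as the "no default" marker means a field cannot default to None itself; a dedicated sentinel object would lift that limitation.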

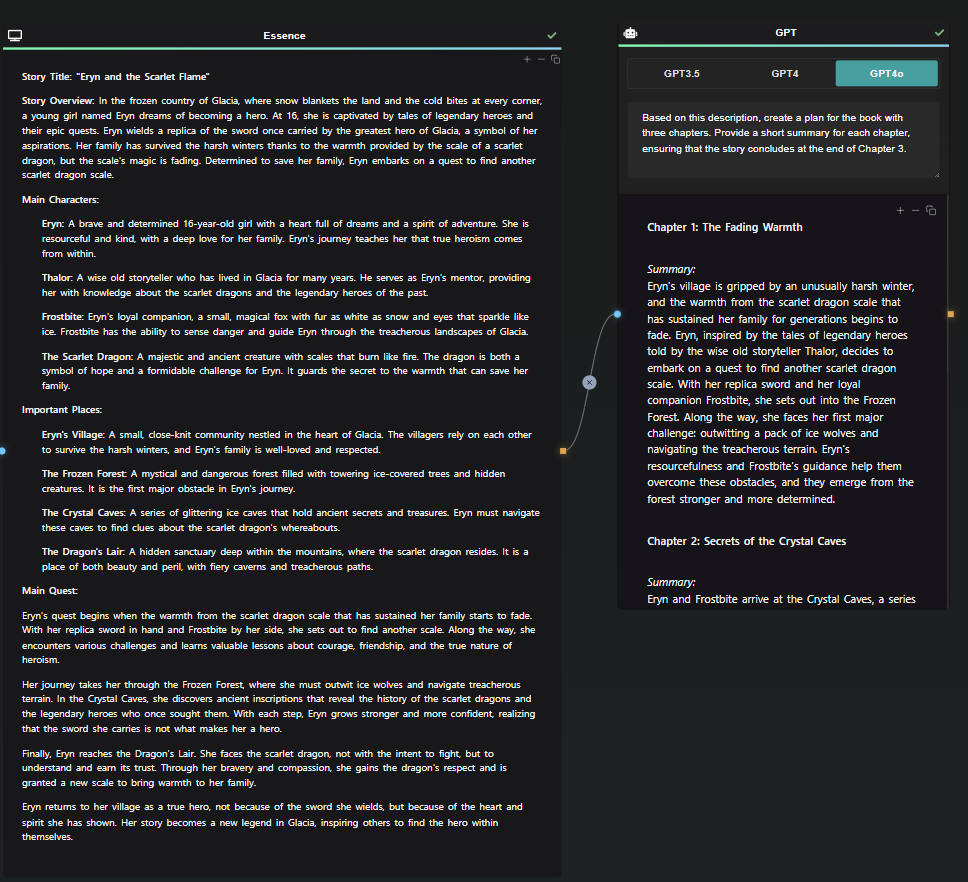

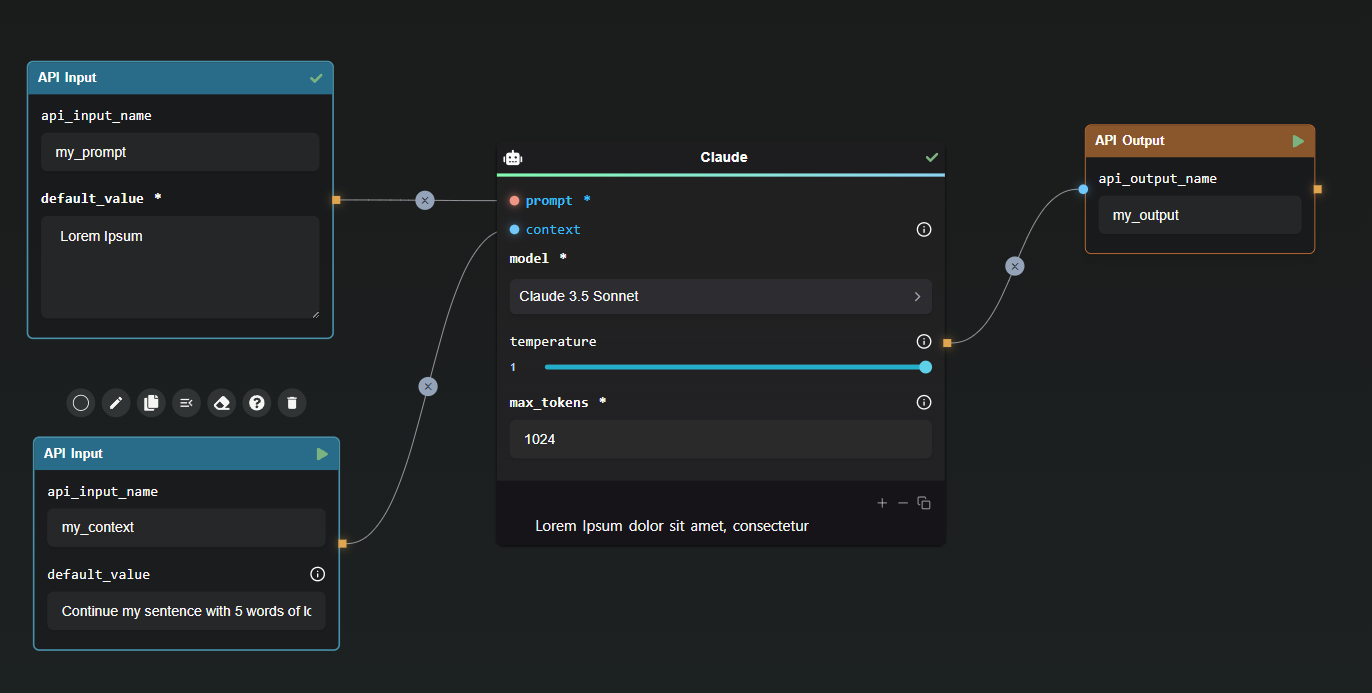

API Output Node: The API Output Node is simple to configure. It specifies the names of the fields in the final response, so the output has a clear, predictable structure. You can add multiple output nodes to return additional or intermediate results.

In this simple example, the API response is formatted as follows:

```json
{
  "my_output": "Lorem Ipsum dolor sit amet, consectetur"
}
```

Here, a single output field named my_output is enough to give the response a clear and concise structure.
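The following Python sketch shows one way to picture how several API Output Nodes could combine into a single response object, for example a final result plus an intermediate one. `OutputNode`, `build_response`, and the `my_draft` field are hypothetical names used only for illustration, and rejecting duplicate field names is an assumption about sensible behavior rather than documented AI-Flow behavior.

```python
import json
from dataclasses import dataclass
from typing import Any


@dataclass
class OutputNode:
    """Hypothetical stand-in for one API Output Node."""
    field_name: str  # key that appears in the API response
    value: Any       # result produced by the node wired into this output


def build_response(nodes: list[OutputNode]) -> dict[str, Any]:
    """Merge every output node into one response object, one key per node."""
    response: dict[str, Any] = {}
    for node in nodes:
        # Two nodes with the same name would silently overwrite each other,
        # so this sketch treats that as a configuration error.
        if node.field_name in response:
            raise ValueError(f"duplicate output field: {node.field_name!r}")
        response[node.field_name] = node.value
    return response


if __name__ == "__main__":
    final = OutputNode("my_output", "Lorem Ipsum dolor sit amet, consectetur")
    draft = OutputNode("my_draft", "Lorem Ipsum dolor")  # hypothetical intermediate result
    print(json.dumps(build_response([final])))
    # prints {"my_output": "Lorem Ipsum dolor sit amet, consectetur"}
    print(json.dumps(build_response([final, draft])))
```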

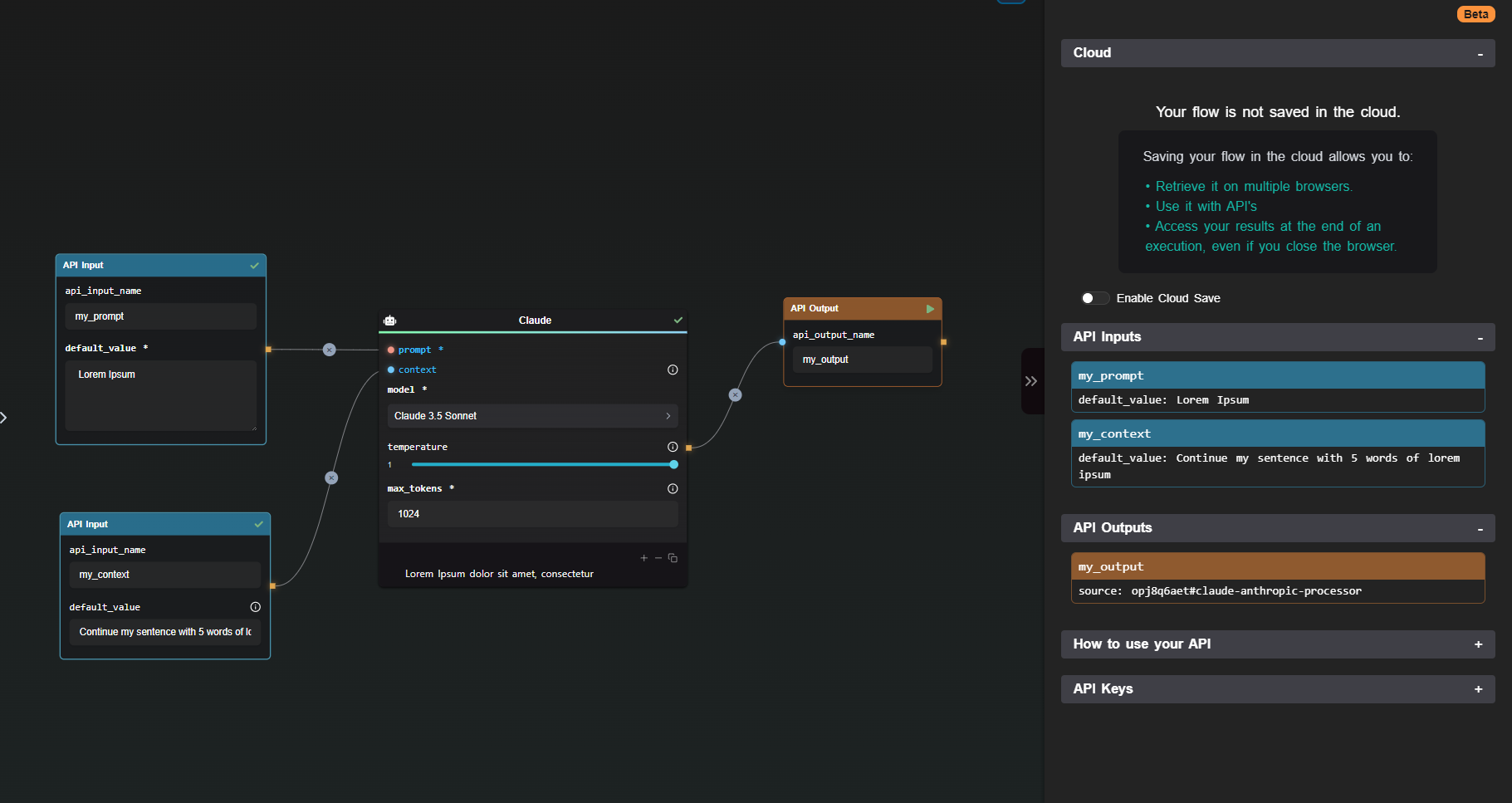

Manage and Monitor Your API with the API Builder View

The API Builder View is your command center for managing and monitoring your AI Workflow API. It is accessible from the right pane of the app, gives an overview of your API configuration, and lets you generate and manage API keys.

Generating API Keys: API keys are generated in the API Builder and are displayed only once, so copy each key to a safe place when you create it. Every request must include a key in the Authorization header as a Bearer token, as in the cURL example below; without it, the call is not authorized.
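Because a key is shown only once, a common practice is to keep it out of source code, for instance in an environment variable, and build the request headers from it at runtime. This minimal Python sketch assumes the key is stored in `AI_FLOW_API_KEY`, the variable name used in the cURL example; the `auth_headers` helper is hypothetical.

```python
import os


def auth_headers(env_var: str = "AI_FLOW_API_KEY") -> dict[str, str]:
    """Build request headers from an API key stored in an environment variable."""
    api_key = os.environ.get(env_var)
    if not api_key:
        # Fail early with a clear message instead of sending an unauthenticated request.
        raise RuntimeError(f"set {env_var} to the API key generated in the API Builder")
    return {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }
```

Failing fast when the variable is missing is easier to debug than tracking down a rejected request later.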

Running Your Flow through the API: The API Builder View provides ready-to-use code snippets for launching your flow. For instance, using cURL, you can start your flow as follows:

```bash
curl https://api.ai-flow.com/v1/flow/<your_flow_id>/run \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $AI_FLOW_API_KEY" \
  -d '{
    "my_prompt": "Lorem Ipsum",
    "my_context": "Continue my sentence with 5 words of lorem ipsum"
  }'
```

This command starts the flow and returns a run ID that identifies the run. Once processing completes, use this ID to retrieve the results.
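For callers that are not using cURL, the following Python sketch sends the same request with the standard library and then waits for the run to finish. The run URL, headers, and body come from the cURL example; everything else is an assumption. In particular, this article does not show the endpoint for retrieving results or the exact shape of the run response, so `wait_for_result` takes a caller-supplied, hypothetical `fetch` function that should return None while the run is still processing.

```python
import json
import time
import urllib.request
from typing import Any, Callable, Optional

# Run endpoint taken from the cURL example.
RUN_URL = "https://api.ai-flow.com/v1/flow/{flow_id}/run"


def build_run_request(flow_id: str, inputs: dict[str, Any], api_key: str) -> urllib.request.Request:
    """Build (without sending) the same POST request as the cURL example."""
    return urllib.request.Request(
        RUN_URL.format(flow_id=flow_id),
        data=json.dumps(inputs).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def start_run(flow_id: str, inputs: dict[str, Any], api_key: str) -> dict[str, Any]:
    """Send the run request and return the decoded JSON reply, which carries the run ID."""
    with urllib.request.urlopen(build_run_request(flow_id, inputs, api_key)) as resp:
        return json.load(resp)


def wait_for_result(
    fetch: Callable[[str], Optional[dict[str, Any]]],
    run_id: str,
    interval: float = 2.0,
    timeout: float = 120.0,
) -> dict[str, Any]:
    """Poll fetch(run_id) until it returns a result or the timeout expires.

    fetch is hypothetical: it should retrieve the run's results by ID (the
    endpoint is not covered here) and return None while the run is in progress.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = fetch(run_id)
        if result is not None:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"run {run_id} did not finish within {timeout} s")
        time.sleep(interval)


# Example usage (not executed here; needs a real flow ID and API key):
#   reply = start_run("<your_flow_id>", {"my_prompt": "Lorem Ipsum"}, api_key)
#   Inspect `reply` for the run ID field, then pass it to wait_for_result.
```

Separating request construction from sending keeps the request easy to inspect and test without touching the network.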

Enhance Integration with Webhook Nodes

The Webhook Node is a versatile tool within the API Builder that sends outputs of your flow to a URL you designate. To configure it, specify the target URL and select the outputs to send; you can optionally add a custom signature so the receiving service can verify that the request comes from your flow.

In this example, the webhook sends the following data:

```json
{
  "my_output": "Lorem Ipsum dolor sit amet, consectetur"
}
```

The Webhook Node delivers this structured payload to the specified URL, which makes integration with other services straightforward, and the optional custom signature lets the receiver authenticate each request.
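On the receiving side, a service should check the signature before trusting the payload. The Python sketch below assumes the custom signature is a shared secret value that the Webhook Node sends in a request header; the header name `X-Signature` and the `handle_webhook` function are hypothetical, since this article does not specify how the signature is transmitted. If the signature is instead computed from the payload, for example as an HMAC, the comparison would be against that computed value.

```python
import hmac
import json
from typing import Any, Mapping

# Hypothetical header name; use whichever header carries your custom signature.
SIGNATURE_HEADER = "X-Signature"


def handle_webhook(headers: Mapping[str, str], body: bytes, expected_signature: str) -> dict[str, Any]:
    """Verify the shared-secret signature, then decode the JSON payload.

    headers is treated as a plain mapping here; most web frameworks expose
    case-insensitive header objects, which also satisfy this interface.
    """
    received = headers.get(SIGNATURE_HEADER, "")
    # compare_digest avoids content-based short-circuiting, which reduces
    # the risk of leaking the secret through response timing.
    if not hmac.compare_digest(received.encode("utf-8"), expected_signature.encode("utf-8")):
        raise PermissionError("webhook signature mismatch")
    return json.loads(body)
```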

Conclusion

The AI Workflow API, powered by the API Builder nodes, offers a streamlined way to create and manage AI flows. With input and output nodes that define the request and response, the API Builder View for managing keys and running flows, and Webhook Nodes for pushing results to other services, developers can build AI workflows tailored to their needs.

Additional Resources

For more detailed information, refer to the following resources: