Image Generation Models

Using leading AI models such as FLUX from Black Forest Labs, DALL-E from OpenAI, and Stable Diffusion from Stability AI, you can generate unique images from text prompts and other parameters.

The sections below describe our primary image generation nodes. Many additional specialized models, covering tasks such as inpainting, outpainting, and background removal, are also available through the Replicate Node and the StabilityAI Node.

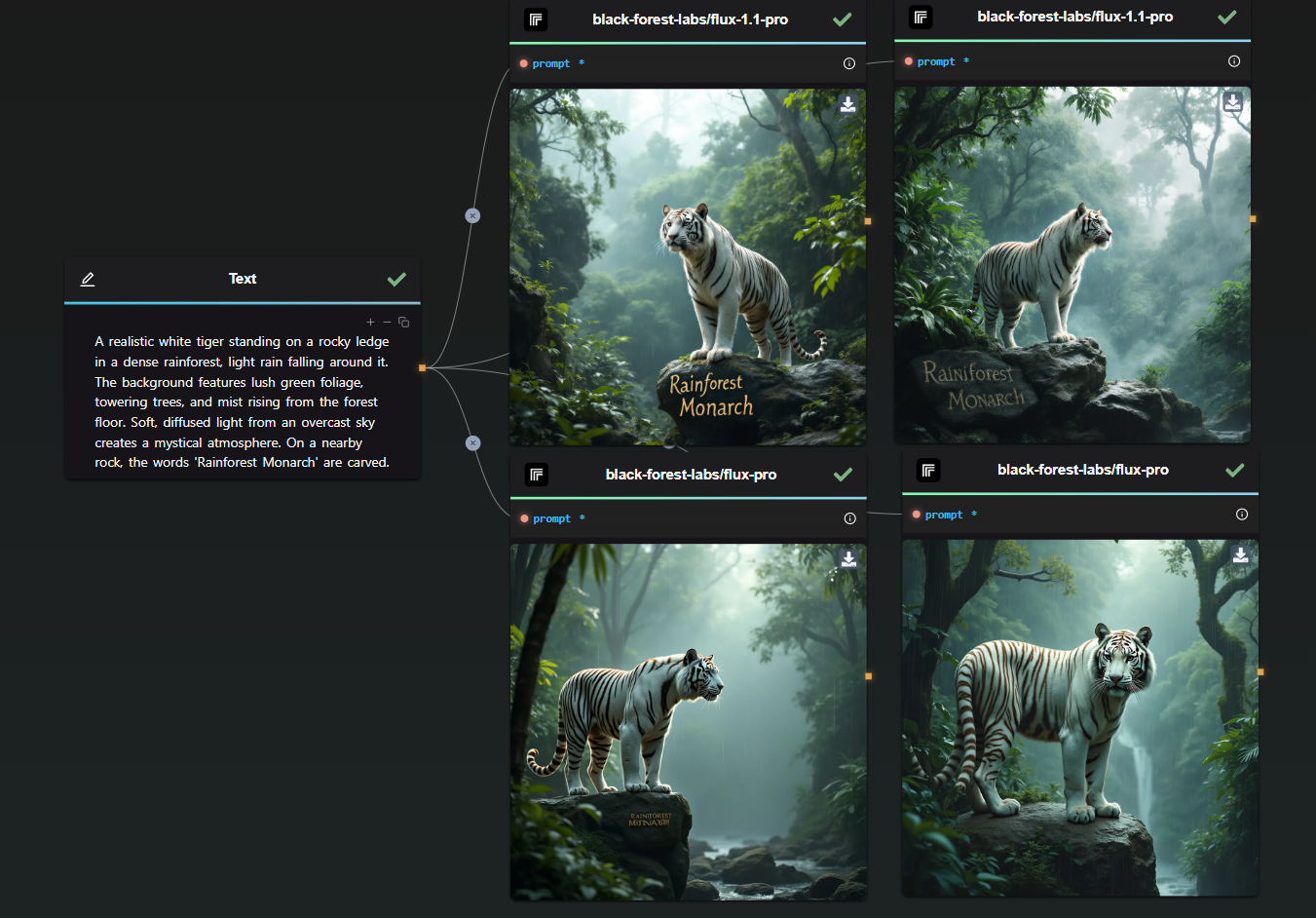

FLUX (with Replicate)

The FLUX model series, available through the Replicate Node, provides versatile text-to-image generation. These models let you create visual content tailored to specific prompts or creative needs.

To learn more about FLUX, see our article "How to Generate High-Quality Images with FLUX 1.1 Pro".

DALL-E (OpenAI)

The DALL-E Node uses OpenAI's DALL-E model, which is known for generating detailed and imaginative images directly from text descriptions. It combines advanced language understanding with visual generation, so even straightforward text prompts produce accurate, expressive images.

Note: The DALL-E and Stable Diffusion nodes operate similarly. For detailed usage instructions, refer to the Stable Diffusion section below.

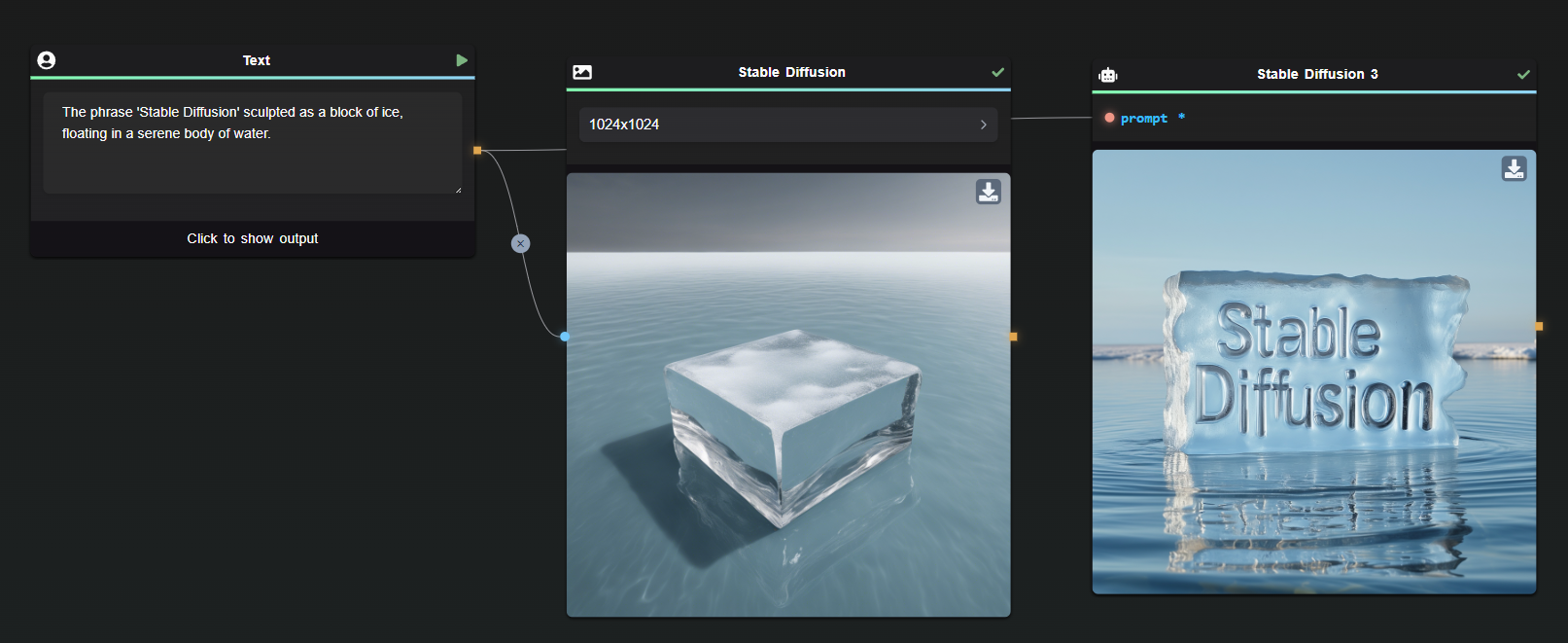

Stable Diffusion (StabilityAI)

The Stable Diffusion Node, powered by Stability AI, offers precise, highly controllable image generation for a wide range of needs, from detailed illustrations and realistic imagery to abstract visuals. The StabilityAI Node provides access to the full Stability AI API suite, including advanced features such as:

- Inpainting: Editing specific portions of an image seamlessly.

- Outpainting: Expanding images beyond their original boundaries.

- Background Removal: Automatically removing or altering backgrounds.

- Relighting: Adjusting the lighting conditions within existing images.

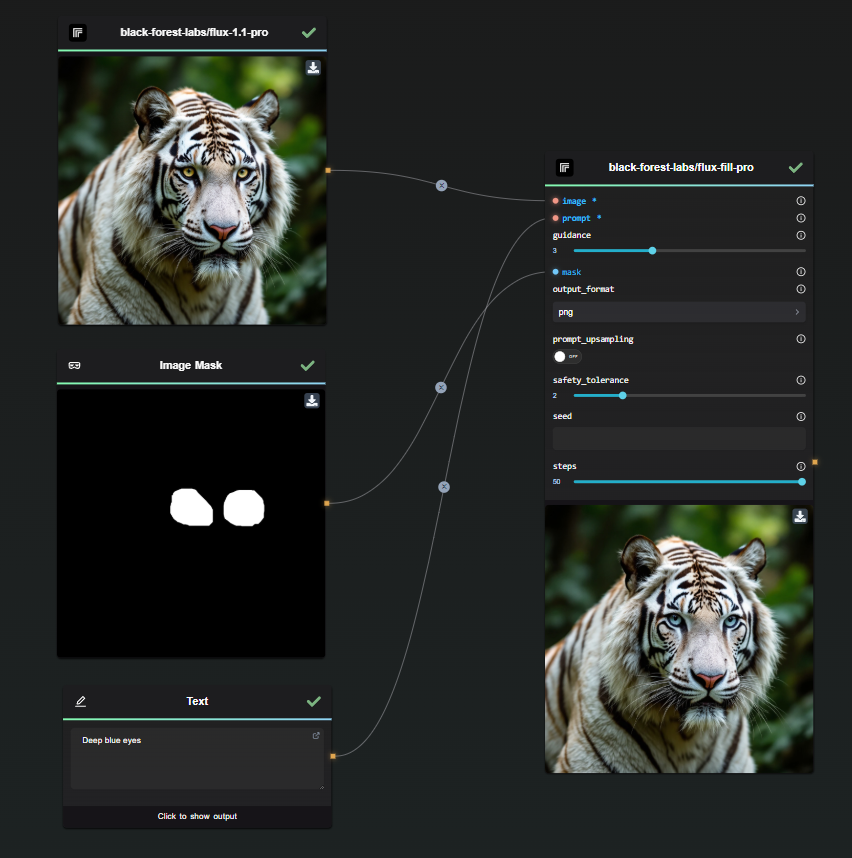

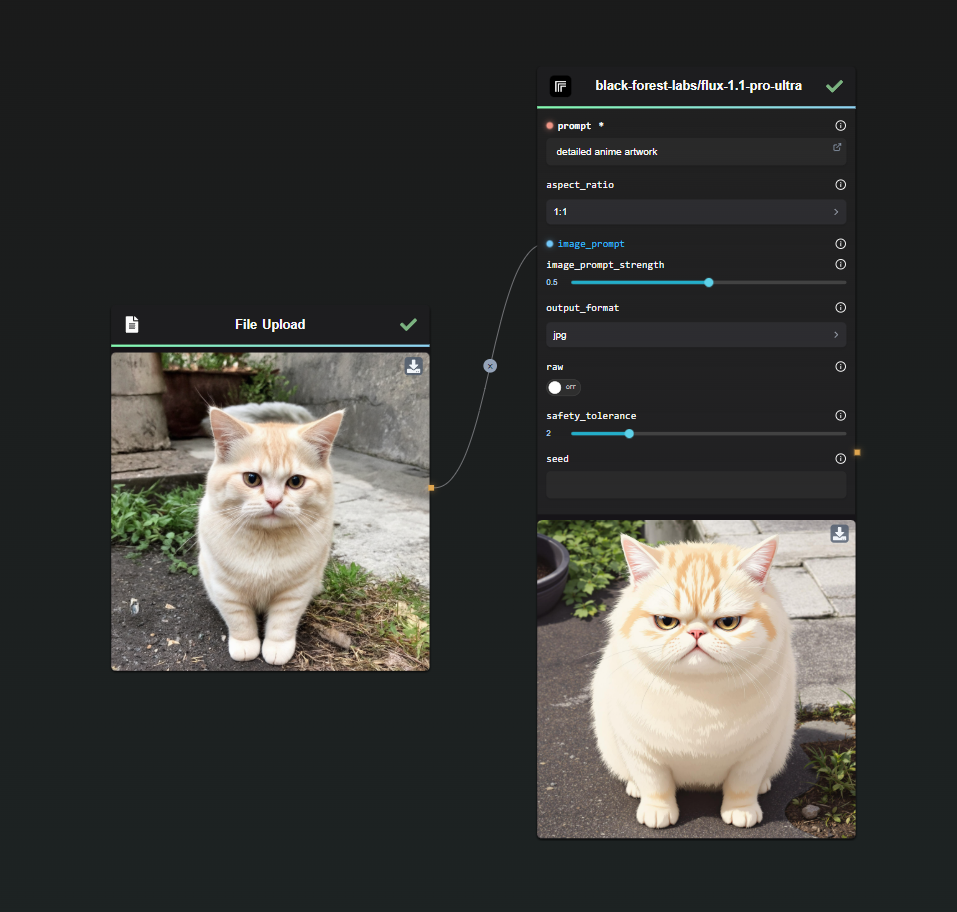

Image Editing Capabilities

Beyond basic image generation, the Replicate and StabilityAI nodes give you access to advanced editing features. Both platforms offer a wide range of specialized models for tasks such as:

- Inpainting & Outpainting: Precisely edit or expand visuals.

- Background Manipulation: Efficiently remove or modify image backgrounds.

- Image Refinement: Enhance and fine-tune visual quality through relighting and other image adjustments.

- Restyling: Apply a different style to an existing image.

To learn more, see our articles "Inpainting with FLUX Fill Pro" and "Restyling with FLUX 1.1 Pro Ultra".

Explore the models available in the Replicate and StabilityAI nodes to get the most out of AI-driven image generation and editing in your workflows.